
Area-Efficient Near-Associative Memories on FPGAs

Udit Dhawan and André DeHon

Proceedings of the 21st ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, pp. 191--200 (FPGA, February 11--13, 2013)

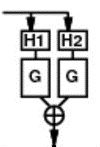

Associative memories can map sparsely used keys to values with low latency but can incur heavy area overheads. The lack of customized hardware for associative memories in today's mainstream FPGAs exacerbates the overhead cost of building these memories using the fixed address match BRAMs. In this paper, we develop a new, FPGA-friendly memory architecture based on a multiple-hash scheme that achieves near-associative performance (less than 5% of evictions due to conflicts) without the area overheads of a fully associative memory on FPGAs. Using the proposed architecture as a 64KB L1 data cache, we show that it achieves near-associative miss rates while consuming 6-7X less FPGA memory resources, for a set of benchmark programs from the SPEC2006 suite, than fully associative memories generated by the Xilinx Coregen tool. Benefits increase with match width, allowing area reductions of up to 100X. At the same time, the new architecture has lower latency than the fully associative memory -- 3.7ns for a 1024-entry flat version or 6.1ns for an area-efficient version, compared to 8.8ns for a fully associative memory, for a 64b key.
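To give a feel for the general idea behind a multiple-hash memory, here is a minimal software sketch. It is not the architecture from the paper: it is a generic k-way multi-hash table in which each bank stands in for one directly addressed RAM, and an insert that finds all k candidate slots occupied counts as a conflict eviction. The class name `MultiHashMemory`, the parameters `num_banks` and `bank_size`, the salted BLAKE2b hash, and the evict-from-bank-0 policy are all assumptions made for illustration.

```python
import hashlib


class MultiHashMemory:
    """Toy k-way multi-hash key/value store (illustrative only).

    Each bank models one directly addressed RAM. A key may live in exactly
    one candidate slot per bank, chosen by a per-bank hash of the key.
    """

    def __init__(self, num_banks=4, bank_size=256):
        self.num_banks = num_banks
        self.bank_size = bank_size
        self.banks = [[None] * bank_size for _ in range(num_banks)]
        self.conflict_evictions = 0  # inserts that had to displace another key

    def _index(self, bank, key):
        # Assumed hash: BLAKE2b salted with the bank number, reduced to a slot.
        # Keys are assumed to be non-negative integers that fit in 64 bits.
        digest = hashlib.blake2b(
            key.to_bytes(8, "little"), digest_size=8, salt=bytes([bank])
        ).digest()
        return int.from_bytes(digest, "little") % self.bank_size

    def lookup(self, key):
        # Hardware would read all banks in parallel; here we scan them in turn.
        for bank in range(self.num_banks):
            entry = self.banks[bank][self._index(bank, key)]
            if entry is not None and entry[0] == key:
                return entry[1]
        return None

    def insert(self, key, value):
        slots = [(b, self._index(b, key)) for b in range(self.num_banks)]
        # Update in place if the key is already stored.
        for bank, idx in slots:
            entry = self.banks[bank][idx]
            if entry is not None and entry[0] == key:
                self.banks[bank][idx] = (key, value)
                return
        # Otherwise take the first empty candidate slot.
        for bank, idx in slots:
            if self.banks[bank][idx] is None:
                self.banks[bank][idx] = (key, value)
                return
        # All candidates occupied: displace the entry in bank 0 (assumed policy).
        bank, idx = slots[0]
        self.banks[bank][idx] = (key, value)
        self.conflict_evictions += 1
```

In this sketch, dividing `conflict_evictions` by the number of inserts gives a rough analogue of the conflict-eviction fraction that the abstract uses to define near-associative behavior. The figures it produces say nothing about the paper's measured results.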

- Author's local PDF copy of paper - dmhc_fpga2013.pdf

- ACM DL Version DOI: 10.1145/2435264.2435298

- Source distribution release of associated code.

N.B. An expanded journal version appears in TRETS.
